Voice-Controlled Smart Home with Edge AI - No Cloud Needed | M5Stack @ CES 2026

-

Hey Makers! 👋

We just wrapped up our demo at CES 2026, and folks have been asking: "Wait, this entire smart home runs offline? Like, FULLY offline?"Yep. No cloud. No API calls. No internet dependency. Everything—from natural language understanding to real-time computer vision—runs locally on M5Stack hardware. Let me break down how we built this thing. 🛠️

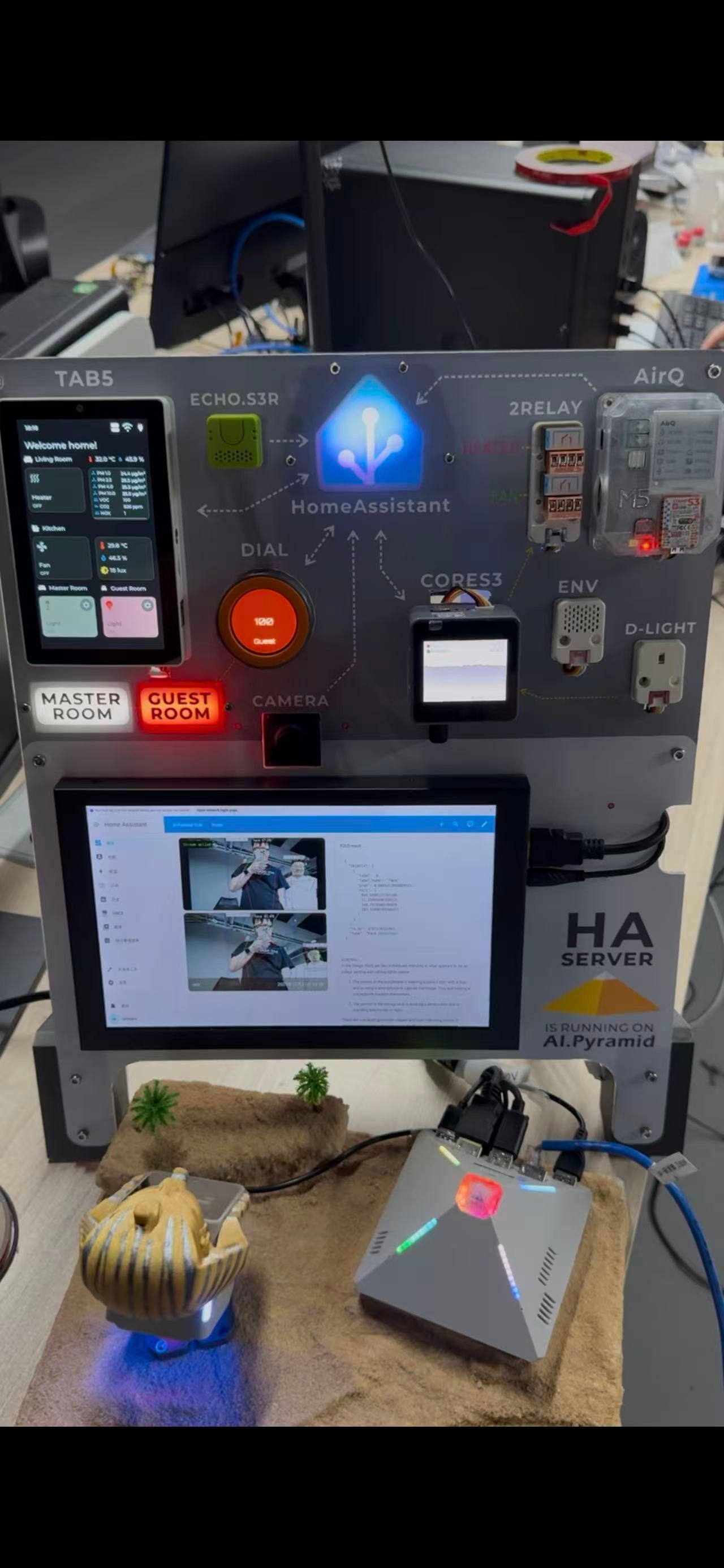

🧠 The Brain: M5Stack AI-Pyramid as the Edge Server

Product: AI-Pyramid (AX8850)

Role: Local Home Assistant ServerThis little powerhouse is the compute hub of the entire system. It's running a full AI stack—completely offline:

LLM (Large Language Model): Qwen 0.5B for natural language understanding

ASR (Automatic Speech Recognition): SenseVoice for voice-to-text

VLM (Vision-Language Model): InternVL3-1B for real-time visual scene understanding

Computer Vision: YOLO for person detection and skeleton tracking

Why this matters:

Traditional smart homes rely on cloud APIs (Alexa, Google Home, etc.). If your internet drops, your "smart" home becomes dumb. This setup? Zero external dependencies. All inference happens on-device.👁️ The Sensing Layer: Real-Time Environmental Data

Product: CoreS3 (Gateway) + Multiple Sensors

Display: TAB5 (Control Panel)We connected a bunch of high-precision sensors to a CoreS3 gateway, which feeds real-time data into Home Assistant and displays it on a TAB5 touchscreen dashboard:

AirQ Unit: Air quality monitoring (PM2.5, CO2, etc.)

ENV Unit: Temperature & Humidity

D-LIGHT Unit: Light intensity (for automations like "turn on lights when it gets dark")

Automation Example:

When D-LIGHT detects low ambient light → Automatically trigger room lights.🎛️ The Control Layer: Touch, Dial, Voice, and Automation

Products: M5 Dial, TAB5, CoreS3We built three control methods:

- Physical Control: M5 Dial (Smart Knob)

Rotate to adjust brightness.

Click to toggle on/off.

Physically wired to Master/Guest Room lights + status LEDs. - Visual Control: TAB5 Touchscreen

Real-time sync of all device states.

Tap to control any light, fan, or sensor. - Automation Logic

Home Assistant rules: "If air quality drops below X → Turn on air purifier."

Sensor-driven actions without manual input.

🗣️ The Magic: Offline Natural Language Voice Control

This is where it gets fun.

Most smart home voice assistants (Alexa, Siri) require cloud processing. We wanted something fully offline that could handle messy, real-world commands.

What the LLM Can Do (Examples from CES Demo):

CAPABILITY

DEMO COMMAND

WHY IT'S HARD

Fuzzy Color Semantics

"Make the bedroom light coffee-colored"

Non-standard color names require semantic mapping.

Multi-Device, Single Command

"Turn on the fan and make the guest room green"

One sentence, two actions, different device types.

Global Aggregation

"Turn off everything in the house"

Needs to understand entity groups.

Sequential Logic

"Set all lights to warm, then turn on the heater"

Chain-of-thought reasoning (CoT).Trade-Off: Speed vs. Capability

Because we're doing pure edge inference (no GPU clusters in the cloud), processing time scales with command complexity:Single device command (e.g., "Turn on the living room light"): ~1-2 seconds

Multi-device command (e.g., "Turn on the fan and set the bedroom to blue"): ~3-4 seconds (+1s per additional device)

Is it slower than Alexa? Yes.

Does it work when your router dies? Also yes. 😎🤖 Bonus: StackChan (The Face of the System)

Product: StackChan (ESP32-based desktop robot)We paired the AI-Pyramid with StackChan, a cute desktop robot that acts as the physical interface for the system. It can:

Display visual feedback during voice interactions.

Show real-time CV detections (e.g., "Person detected in hallway").

Act as an interactive "mascot" for the smart home.

(Left side of the demo photo: That's StackChan. Right side: The AI-Pyramid brain.)💬 Discussion

Q: Why not just use Home Assistant + Alexa?

A: Privacy, reliability, and no subscription fees. Plus, this is way more hackable.Q: Can I build this myself?

A: Absolutely. All the hardware is off-the-shelf M5Stack gear. The AI models are open-source (Qwen, SenseVoice, InternVL3, YOLO). We'll likely open-source the integration code soon.Q: What about latency?

A: For simple commands, it's nearly instant. For complex multi-device commands, expect 1-2 seconds per device. We're exploring optimizations (model quantization, caching).📌 Resources

📚 Docs: https://docs.m5stack.com

🗣️ Forum: https://community.m5stack.com

🛒 Shop (AI-Pyramid, CoreS3, TAB5, M5 Dial): https://shop.m5stack.com

Note: This demo was showcased at CES 2026. Shoutout to the engineers who made this work on a tight deadline. 🙌 If you were at the booth, drop a comment! - Physical Control: M5 Dial (Smart Knob)